flowchart TD

A["Code Submission"] --> B["SonarQube Analysis<br/>(CWE mapping)"]

B --> C["LLM Feedback<br/>(Level-appropriate)"]

C --> D["Student View &<br/>Instructor Reports"]

Pedalogical

AI-Grounded Vulnerability Feedback for Non‑Security CS Courses

TL;DR

We propose Pedalogical, a tool and pedagogical approach that grounds LLM feedback in static-analysis findings to teach secure coding in non-security CS courses.

Problem & Motivation

We Need to Improve Education in Developing Secure Code

Security failures start early.

Students often learn to write code before they learn to write secure code.

- Software vulnerability exploitation remains a leading vector in breaches; secure coding must be integrated early [1], [2], [3].

- Teaching students to use static analyzers early is important but difficult, because the output of these tools is complex.

- Existing static analyzers flag issues but rarely deliver actionable, level-appropriate pedagogy [4], [5].

Prior Findings: What We Know So Far

Empirical evidence supports this gap.

- Vulnerabilities increase and diversify as students progress from CS1 → advanced courses [6].

- Many CS programs lack sustained, program-wide security practice; students introduce vulnerabilities in routine coursework [6], [7], [8].

- There is a mismatch between the vulnerabilities students actually produce and those emphasized in detection research [8], [9].

- Time pressure & functionality-first norms drive insecure patterns; targeted feedback can help [7], [9].

Research Gap

Proposed Approach

Pedalogical

System Pipeline

Grounding the feedback in analyzer findings reduces hallucination risk; the LLM then turns those findings into audience-appropriate feedback.
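The grounding step described above can be sketched as a prompt builder that passes the LLM only what the analyzer reported. This is a minimal illustration, not Pedalogical's implementation: the `Finding` fields, the `LEVEL_GUIDANCE` levels, and the prompt wording are all assumptions, and no SonarQube or LLM API is called.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """One analyzer finding. Field names are illustrative, not SonarQube's schema."""
    cwe: str       # weakness identifier, e.g. "CWE-89"
    file: str      # path of the flagged file
    line: int      # line number of the flagged code
    message: str   # analyzer's description of the issue


# Hypothetical audience levels and the tone each one requests from the LLM.
LEVEL_GUIDANCE = {
    "intro": "Use plain language, avoid jargon, and give one small hint.",
    "advanced": "Name the weakness class and discuss mitigation trade-offs.",
}


def build_grounded_prompt(findings: list[Finding], level: str) -> str:
    """Build a prompt that restricts the LLM to the analyzer's findings."""
    if level not in LEVEL_GUIDANCE:
        raise ValueError(f"unknown level: {level!r}")
    lines = [
        "You are giving secure-coding feedback to a student.",
        LEVEL_GUIDANCE[level],
        "Discuss ONLY the findings listed below; do not invent new issues.",
        "",
        "Findings:",
    ]
    for f in findings:
        lines.append(f"- [{f.cwe}] {f.file}:{f.line} {f.message}")
    return "\n".join(lines)


if __name__ == "__main__":
    demo = [Finding("CWE-89", "app.py", 42, "SQL query built from user input")]
    print(build_grounded_prompt(demo, "intro"))
```

Keeping the findings as the only source of facts in the prompt is one plausible way to realize "grounded" feedback; the audience level changes only the instructions, not the evidence.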

Pedagogical Learning Theories

Pedalogical integrates established learning theories into the system design to enhance the learning experience:

Pedalogical Application

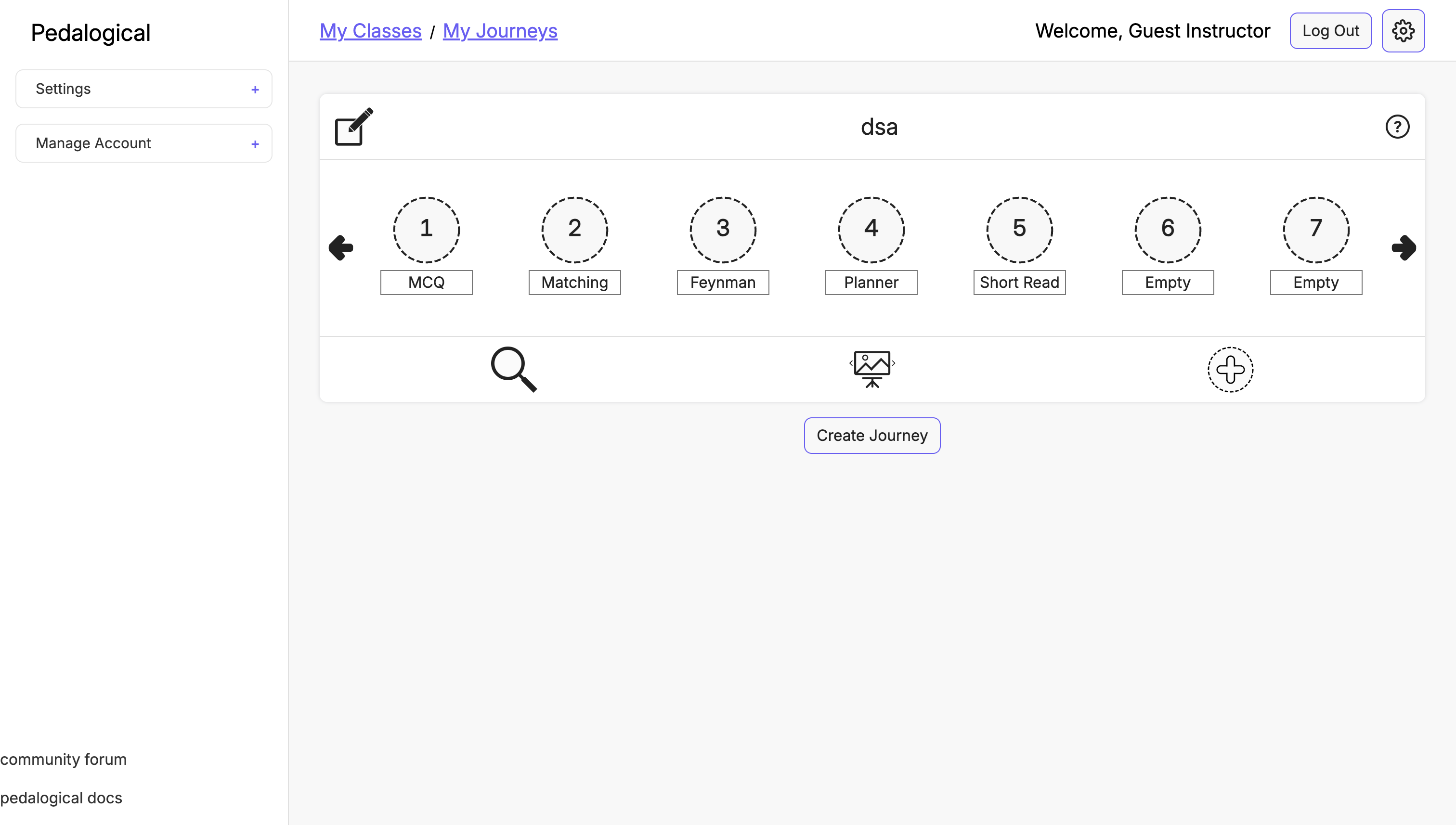

Figure: Pedalogical Application

Pedalogical Question Nodes

Figure: Pedalogical Question Nodes

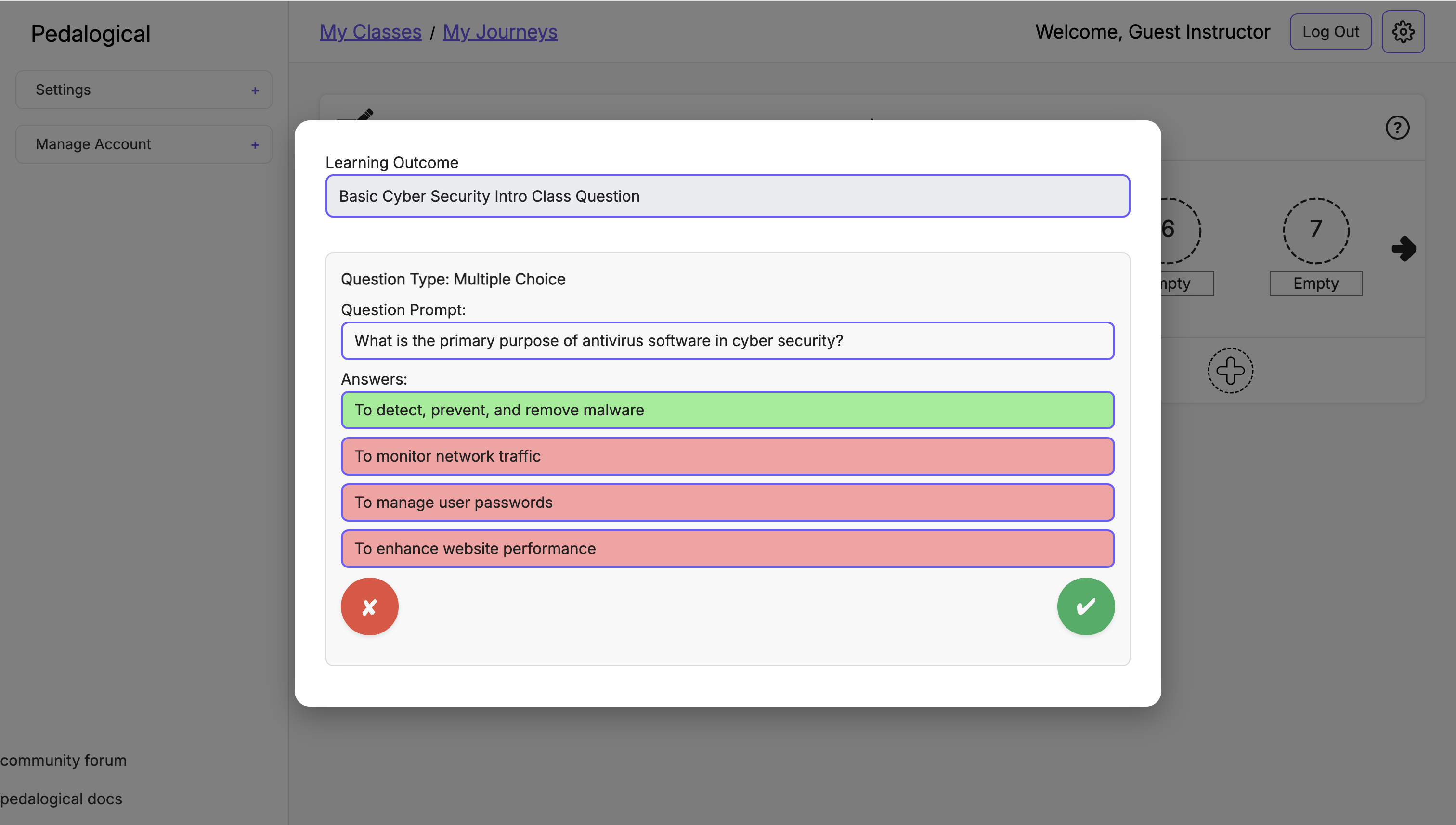

Pedalogical LLM Question Generation

Figure: Pedalogical LLM Question Generation

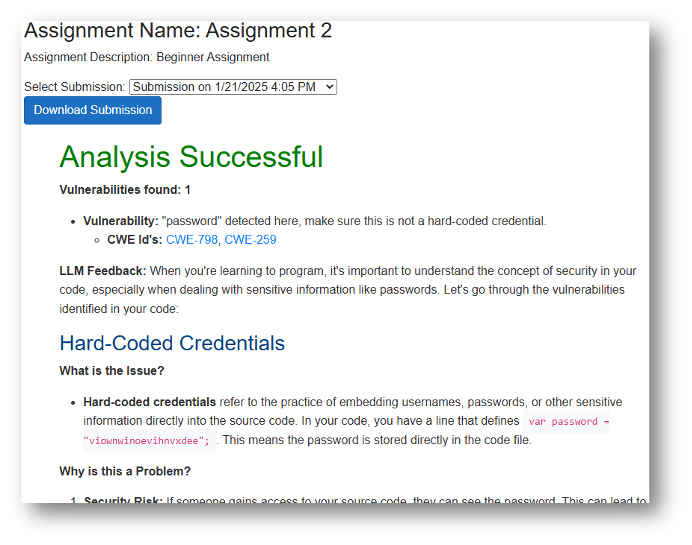

Cybersecurity: Sample Student Feedback

Figure: Sample Student Feedback

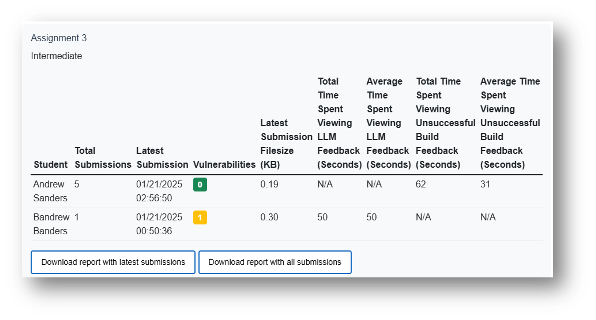

What Instructors Get

- Cohort dashboard: per-assignment vulnerability counts & trends.

- Downloadable reports: analyzer findings, prompts/responses, submission diffs.

- Configurable feedback detail level for scaffolding.
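As a rough sketch of what a cohort dashboard might compute, the snippet below aggregates per-assignment vulnerability counts and a simple trend between consecutive assignments. The record layout `(assignment, student, count)` and the function names are hypothetical; the real report format is not specified here.

```python
from collections import defaultdict


def cohort_counts(records):
    """Summarize vulnerabilities per assignment.

    records: iterable of (assignment, student, vulnerability_count) tuples,
    one per student submission (an assumed layout).
    """
    totals = defaultdict(int)
    students = defaultdict(set)
    for assignment, student, count in records:
        totals[assignment] += count
        students[assignment].add(student)
    return {
        a: {
            "total": totals[a],
            "students": len(students[a]),
            "mean": totals[a] / len(students[a]),
        }
        for a in totals
    }


def trend(summary, order):
    """Change in mean vulnerabilities per student between consecutive assignments."""
    means = [summary[a]["mean"] for a in order]
    return [later - earlier for earlier, later in zip(means, means[1:])]


if __name__ == "__main__":
    # Synthetic records for illustration only, not study data.
    demo = [("A1", "s1", 4), ("A1", "s2", 2), ("A2", "s1", 1), ("A2", "s2", 1)]
    summary = cohort_counts(demo)
    print(summary)
    print(trend(summary, ["A1", "A2"]))
```

A negative trend value means the cohort's mean vulnerability count dropped from one assignment to the next.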

Sample Instructor Report

Figure: Sample Instructor Report

Proposed Study Context

Research Questions

RQ1: Is AI-generated vulnerability feedback associated with reduced vulnerabilities in revised submissions?

RQ2: Does exposure to AI-generated vulnerability feedback improve secure coding practices over time?
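One way the RQ1 outcome could be operationalized is as the per-CWE change in finding counts between an initial and a revised submission. The sketch below assumes each submission is reduced to a list of CWE identifiers; the function name and input shape are illustrative, not the study's actual measure.

```python
from collections import Counter


def cwe_change(initial, revised):
    """Return {cwe: revised_count - initial_count} for every CWE in either submission.

    Negative values mean fewer findings of that type after revision;
    positive values mean the revision introduced new findings.
    """
    before, after = Counter(initial), Counter(revised)
    return {
        cwe: after[cwe] - before[cwe]
        for cwe in sorted(set(before) | set(after))
    }


if __name__ == "__main__":
    # Synthetic example, not study data.
    first = ["CWE-89", "CWE-89", "CWE-79"]
    second = ["CWE-89", "CWE-798"]
    print(cwe_change(first, second))
```

Reporting the change per CWE rather than as a single total also shows when a revision fixes one weakness but introduces another.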

Proposed Study Context

- Multi-course, multi-institutional.

- Undergraduate courses (intro → advanced; multiple sections; in-person & online).

- Incentivized via bonus points contingent on meeting minimum functionality and reducing vulnerabilities.

Data & Measures

- Static analysis artifacts: CWE-tagged vulnerabilities, security hotspots, bugs, code smells.

- Engagement telemetry: time on feedback, resubmission frequency, interaction with explanations.

- Learning signals: pre/post patterns across assignments; regression models of engagement → reduction.

- Qualitative: end-of-semester survey on usefulness & strategies.

Analysis Plan

- Longitudinal within-course and cross-course comparisons.

- Regression modeling: engagement metrics → vulnerability change.

- Category-level success: which CWE types improve most?

- Sensitivity to bonus-point variability across courses (limitations acknowledged).
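The regression item above can be illustrated with a single-predictor ordinary least squares fit relating one engagement metric to vulnerability change. This is a deliberately simplified sketch: the actual models would likely include several predictors and course-level controls, and the variable names and data below are synthetic assumptions.

```python
def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x (one predictor).

    Returns (slope, intercept).
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length samples with at least 2 points")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x has no variance")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


if __name__ == "__main__":
    # Synthetic data for illustration only, not study results.
    resubmissions = [0, 2, 4, 6]            # engagement metric per student
    vulnerability_change = [0, -1, -2, -3]  # revised minus initial count
    slope, intercept = fit_line(resubmissions, vulnerability_change)
    print(f"slope={slope:.2f}, intercept={intercept:.2f}")
```

A negative slope would indicate that more engagement is associated with a larger reduction in vulnerabilities; it would not by itself establish causation, especially given the bonus-point variability noted above.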

Expected Contributions

- A replicable pipeline for secure-coding feedback integrated into non-security courses.

- Evidence that grounded LLM feedback can reduce vulnerabilities and shape habits.

- A platform for program-level learning analytics on secure coding.

Anticipated Threats & Limitations

- Bonus-point schemes differ across courses → potential confounds.

- Structured prompts mitigate LLM variability, but do not eliminate it [12].

Encouraging Early Analysis

Pedalogical in a CS2 (Data Structures) Course

- Students designed and selected data structures for a medium-sized programming project.

- The experimental group used the Pedalogical chatbot for guided reasoning and scaffolding; the control group used a generic ChatGPT-4.0 wrapper. This design enabled comparison of design quality, reasoning depth, and tool engagement.

- Under rubric-based grading, students in the experimental group performed significantly better on project outcomes, suggesting increased metacognitive awareness and stronger problem-solving strategies.

Call to Action

- Adopt Pedalogical in non-security courses to normalize secure coding.

- Collaborate on cross-institutional studies and shared analytics.

- Extend to additional languages & rulesets; explore adaptive feedback policies.

Thank You!

Contact Information